The Artificial Intelligence (AI) DNA

It is everywhere! What? AI.

But let me start with a question to you, the reader. How smart do you think AI is? Give yourself about 10 seconds before reading my answer and opinion.

10 … 9 … 8 … 7 … 6 … 5 … 4 … 3 … 2 … 1.

Well, I would say AI has no intelligence at all! At least not the human intelligence that we all agree upon. And what is human intelligence? Human intelligence can be defined as any out-of-the-box innovation generated from knowledge that was taught, or from one’s life experience. For example, the first Neanderthal who decided to cross a river by bridging it with a tree needed intelligence for this innovative concept. Whereas a modern individual who writes Machine Learning code in Python all day, in the same manner that he/she was taught (at university or online), and who may also be a hard-working and studious employee, is not… intelligent!

So, back to our question: how intelligent is AI? To give you a proper answer, you should first know what the main pillars of AI are, the reasons behind its revolution and, lastly but most importantly, the AI DNA.

Where does it all start?

AI is not just robots serving coffee or playing football, or even self-driving cars. AI refers to any technology that allows machines to act and behave like humans! And what do humans do? Consider the simple example of a racecar driver in a rally competition. The driver 1) takes action by turning left or right, 2) relies on vision to decide when to turn, and 3) communicates about these decisions, which assists them in all their maneuvers.

Now the above constitutes the three main pillars of AI. Let’s discuss them one by one, and more importantly explore their DNAs!

The 3 Main Pillars of AI

The three main pillars that define AI are:

- Machine Learning for decision making

- Convolutional Neural Networks (and family) for vision matters (image recognition)

- Natural Language Processing (NLP) for communication matters (text analysis)

Let us put all the above in a comprehensive, yet realistic case study, integrated with AI.

Case study: The Virtual Doctor!

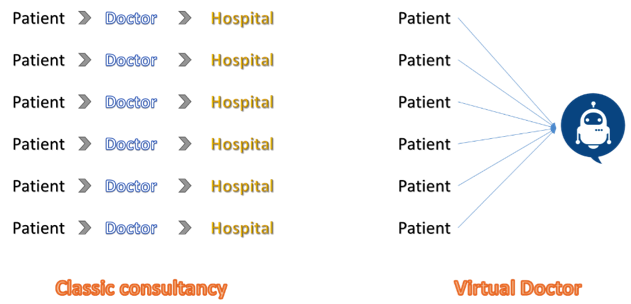

Let us consider a hospital that wants to launch a virtual automated system, such as a “chatbot” or virtual doctor, to serve patients at an early stage. The chatbot will try to give advice to the thousands of patients it consults simultaneously, advising them on which drug to take or not, or referring them to specialized doctors in the event that it fails at its job.

Our AI virtual doctor will only perform accurately if Data Scientists are capable of building out the three main pillars above, regardless of their order!

Pillar 1: Image Recognition

The first thing our AI system would do is identify the patient, because AI should consider that not all patients are computer or web literate. Consequently, all consultations would start with the recognition of the patient through an integrated image recognition system, based on a huge database of facial images and associated names. A “Convolutional Neural Network”, smartly coded by humans, would allow the hospital to automatically identify patients once they sit in front of their devices and engage in a conversation with the virtual doctor.

Next the patient will expect advice from the virtual doctor.

Pillar 2: Natural Language Processing

“Hello Mr. Jones, how can I be helpful to you?” This is the first thing the chatbot would tell the patient. In fact it will be trained, like secretaries, to welcome any new patient to the hospital. “Well, I have had this mild throat pain for the past two days,” replies Mr. Jones. “Oh it is ok, nothing important, just drink hot tea tonight and you will feel great tomorrow,” replies the chatbot.

The chatbot is trained to mimic a doctor, by analyzing the text it receives from the patient.

The decision of the virtual doctor was possible because humans (the coders), together with doctors, analyzed all possible patient scenarios and then translated them into code and algorithms (like LSTM), allowing the virtual doctor to extract the information from the text, evaluate the meaning and then generate a response. These text mining solutions were built using Natural Language Processing tools.

But how did the virtual doctor tell the patient that “hot tea” was the best medicine? Here comes the role of Machine Learning algorithms.

Pillar 3: Machine Learning

While chatting with the chatbot, Mr. Jones informed the virtual doctor that he was suffering only from mild throat pain. This sentence was broken down and transformed into a mathematical representation (more precisely, into a Word Vector), and then sent to a Machine Learning algorithm which had been trained on all possible scenarios (thousands of them!). Once words like “throat”, “pain” and “mild” or “only” were passed to the algorithm, already trained by human intelligence, it was capable of predicting the automated and memorized outcome of Mr. Jones’ diagnosis. The action was then to inform him that he should drink “hot tea”. The model sent his diagnosis via the chatbot/virtual doctor.

Well guys, isn’t that a great idea? Serving 1,000,000 patients in parallel and with a single machine that works at the speed of light? The idea is simple, true. But what is actually involved behind it to make it seem that easy?! Fasten your seat belt and let us check it out!

Making things happen with IoT and Big Data

The AI universe would not be possible if there was no IoT and Big Data. The first allows the travelling of data through the web via all tech devices such as mobiles, computers, watches, etc. and the second allows the storage, processing and conveying of data on the cloud.

However, this is not the purpose of this article, therefore we will not be going into it ☺

Pillar 1: Image Recognition

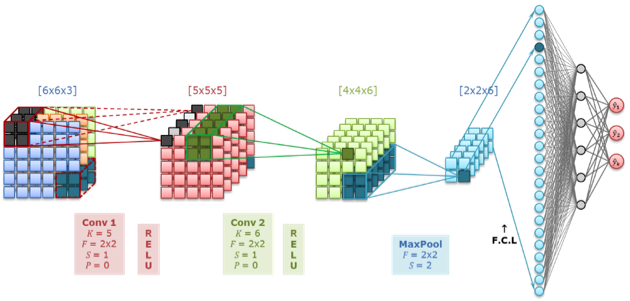

Convolutional Neural Networks

These solutions would not be possible without the breakthrough improvements in technology and in GPU processing. The reason is that image processing requires an enormous amount of computation before generating the result.

And the processing goes through the simplified steps below.

Step 1: Decomposing the image of the patient into pixels, each with its 3 color values

Step 2: Convolving over the pixels with filters to find patterns

Step 3: Convolving over the first patterns again and again to fine-tune them

Step 4: Bringing all the detailed patterns into an Artificial Neural Network to identify the image.
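To make Step 2 less abstract, here is a minimal sketch of one filter sliding over an image, in plain Python. The 4x4 image, the filter values and the function name `convolve2d` are all made up for illustration, and a single grayscale channel is assumed instead of three colors. A real CNN learns its filter values during training rather than having them written by hand.

```python
# Minimal sketch of "convolving over the pixels with a filter".
# No padding, stride 1. As in most CNN libraries, the filter is not flipped,
# so strictly speaking this is a cross-correlation.

def convolve2d(image, kernel):
    """Slide `kernel` over `image` and return the map of weighted sums."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Step 1 (simplified): a tiny image, dark on the left and bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Step 2: a hand-written filter that reacts to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The large values in the middle column mark exactly where the edge sits. Step 3 would apply further filters to `feature_map` itself.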

So at the end of the line, the CNN needs an ANN! So what is an ANN?

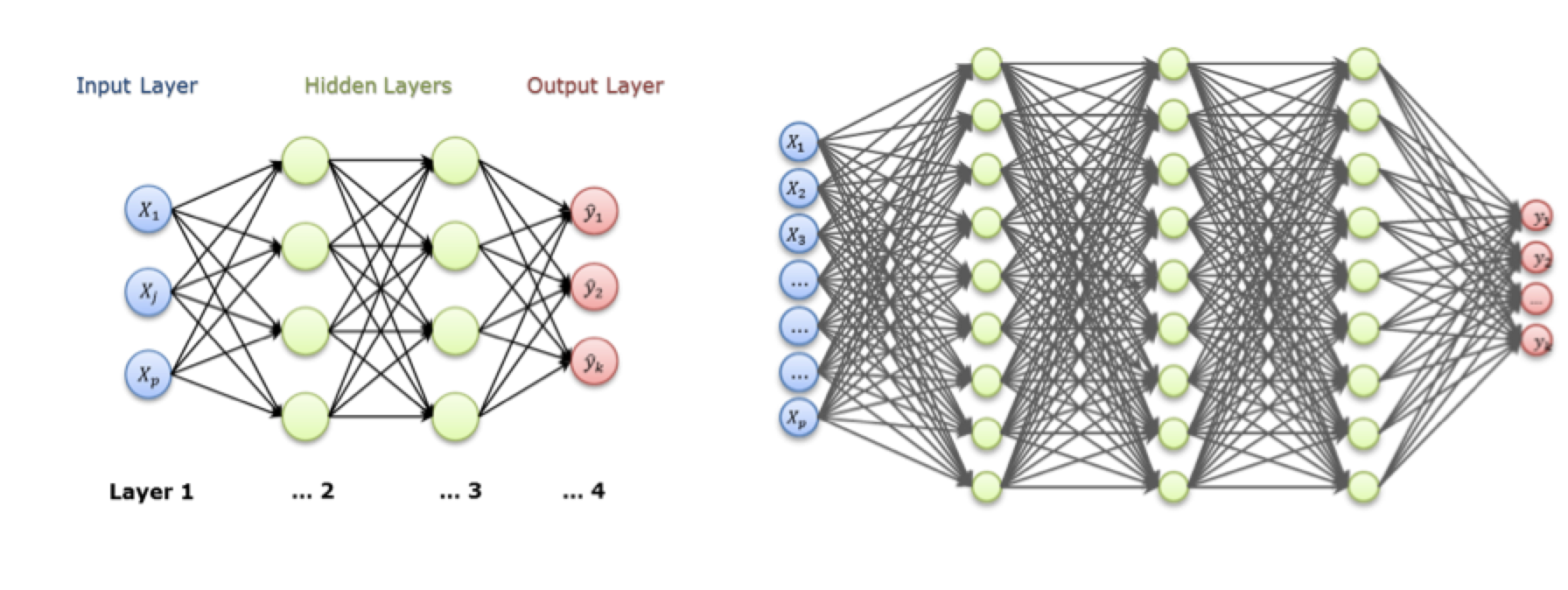

Artificial Neural Networks and Deep Learning

Although the first ideas date back to the 1940s and 50s, and the field was revived in the 80s, ANNs only found their glory with the tech revolution.

It is a model that operates in a way similar to the brain: neurons exchanging information via synapses to generate an output. The application is simple: you feed it with data, and you let the algorithm find the optimized predictive model for you … by itself! But “by itself” should not suggest human-brain-like processing! It is about strict code and algebra, smartly blended by humans using already existing programming libraries, which the machine has zero authority to modify!

If the selected ANN model includes many layers of neurons between its inputs and its output, it is then called a Deep Neural Network, or Deep Learning.
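The “strict code and algebra” can be shown in a few lines. The sketch below pushes two inputs through one hidden layer and one output neuron. All weights and biases are hypothetical numbers chosen by hand; in a real network they are what training adjusts. The names `dense_layer` and `sigmoid` are just for this example.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of the inputs, then a sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical, hand-picked parameters (a trained network would learn these).
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]  # 2 hidden neurons, 2 inputs each
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]              # 1 output neuron, 2 hidden inputs
output_biases = [0.0]

inputs = [1.0, 1.0]
hidden = dense_layer(inputs, hidden_weights, hidden_biases)
output = dense_layer(hidden, output_weights, output_biases)
print(hidden, output)
```

Stacking more calls to `dense_layer` between `inputs` and `output` is, conceptually, what turns this into a deep network.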

Now, did ANNs pop up from scratch? Surely not. They are the normal evolution of Predictive Machine Learning. They were mainly developed for cases where classical ML fell short on accuracy, and they carry most Machine Learning tools in their DNA.

Pillar 2: Predictive Machine Learning

Introduction

Also known as “supervised” Machine Learning, these algorithms are simple in concept, and simple to apply with today’s advanced automated tools. They all follow the same 3 steps:

- Input data: pass the complete data set to the algorithm

- Train the model: let it find the equations, or models that best reproduce the original data

- Make prediction: use the obtained model to make predictions

There is a wide range of models, but I will go through the most important ones very briefly.

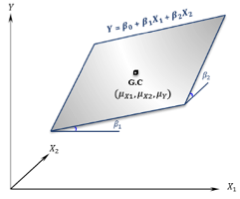

Linear Regressions

These are models that allow you to estimate a quantitative output, like blood pressure, based on the input you feed into the model.
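As a minimal sketch, here is a one-input linear regression fitted with the ordinary least squares formulas, following the three steps above (input data, train, predict). The ages and blood pressure values are invented for illustration and deliberately lie on a straight line; `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Step 1: input data (made-up ages and systolic blood pressure readings).
ages = [30, 40, 50, 60]
pressures = [120, 125, 130, 135]

# Step 2: train the model, i.e. find the equation that best reproduces the data.
slope, intercept = fit_line(ages, pressures)

# Step 3: make a prediction for a new patient aged 70.
predicted = slope * 70 + intercept
print(slope, intercept, predicted)  # 0.5 105.0 140.0
```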

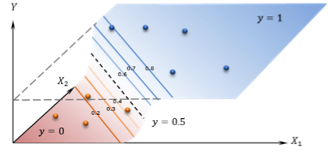

Logistic Regressions

These are similar to the previous models, but are used for predicting a qualitative output, like the regularity type of the heartbeat after an operation, based on input data like age, previous illness, blood pressure, etc.
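A minimal sketch of the idea, assuming a single input and a yes/no output: the model is a linear equation passed through a sigmoid, and its two parameters are found by plain gradient descent. The “risk scores” and labels below are invented (1 = irregular heartbeat), and the learning rate and number of epochs are arbitrary choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, learning_rate=0.1, epochs=1000):
    """Fit weight and bias by gradient descent on the average log-loss."""
    weight, bias = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(weight * x + bias) - y
            grad_w += error * x
            grad_b += error
        weight -= learning_rate * grad_w / n
        bias -= learning_rate * grad_b / n
    return weight, bias

# Made-up, centered risk scores and whether the heartbeat was irregular (1) or not (0).
risk_scores = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
irregular = [0, 0, 0, 1, 1, 1]

weight, bias = train_logistic(risk_scores, irregular)

def predict_proba(x):
    """Estimated probability of an irregular heartbeat for risk score x."""
    return sigmoid(weight * x + bias)

print(predict_proba(-1.5), predict_proba(1.5))
```

The output is a probability rather than a quantity, which is what makes the model suitable for qualitative outcomes.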

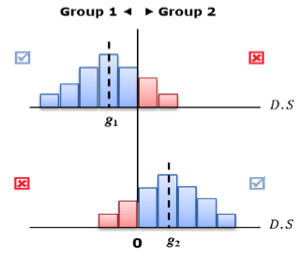

Discriminant Analysis

Like Logistic Regression, DA is used to predict a qualitative output as well, but the model itself takes a different approach. It can predict the “diagnosis” of a patient with a “geometrical” approach (as shown in the picture on the right) or with a “probabilistic” one.

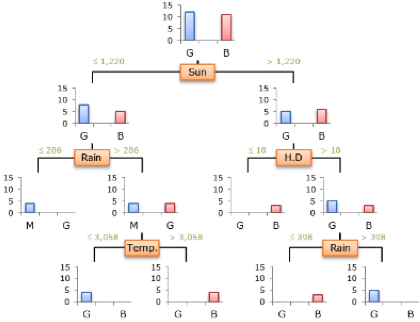

Decision Trees

These can be used to estimate both quantitative and qualitative outputs. They are very powerful and can be used on almost any kind of data. The concept is that they categorize the output (healthy – not healthy, for example) by falling down the tree according to the inputs. Very famous tree-based methods are:

- CART

- Random Forest

- AdaBoost
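“Falling down the tree” can be sketched with a tiny hand-built tree. The features, thresholds and labels below are invented purely for illustration (they are not medical guidance), and algorithms such as CART would learn them from data instead. Random Forest and AdaBoost then combine many such trees.

```python
# A hand-built tree stored as nested dictionaries (hypothetical thresholds).
tree = {
    "feature": "temperature",
    "threshold": 38.0,
    "below": {
        "feature": "heart_rate",
        "threshold": 100,
        "below": {"label": "healthy"},
        "above": {"label": "not healthy"},
    },
    "above": {"label": "not healthy"},
}

def classify(node, patient):
    """Walk from the root to a leaf, choosing a branch at each question."""
    while "label" not in node:
        if patient[node["feature"]] <= node["threshold"]:
            node = node["below"]
        else:
            node = node["above"]
    return node["label"]

print(classify(tree, {"temperature": 37.0, "heart_rate": 70}))   # healthy
print(classify(tree, {"temperature": 39.0, "heart_rate": 70}))   # not healthy
```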

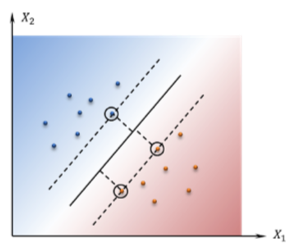

Support Vector Machines

Invented in the 60s, SVMs were only put into wide application once computing power allowed it. The concept is about finding the “boundary” that best separates the groups. On the chart on the right, any new observation will be categorized depending on whether it falls on the right or the left side of the line.

It looks easy, but imagine a data set with 30 variables! What will those boundaries be?!
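One way to picture it: with 30 variables the line becomes a flat boundary (a hyperplane) described by 30 weights and one offset, and a new observation is categorized by the sign of a weighted sum. The sketch below only shows this decision rule for a linear SVM. The weights are made-up numbers, since finding the best ones is what SVM training does and is not shown here.

```python
def decision_value(weights, bias, observation):
    """Positive on one side of the boundary, negative on the other."""
    return sum(w * x for w, x in zip(weights, observation)) + bias

def classify(weights, bias, observation):
    return "group A" if decision_value(weights, bias, observation) >= 0 else "group B"

# Hypothetical boundary in 30 dimensions (a trained SVM would supply these).
weights = [1.0] * 15 + [-1.0] * 15
bias = 0.0

first = [2.0] * 15 + [1.0] * 15    # weighted sum = 30 - 15 = 15
second = [1.0] * 15 + [2.0] * 15   # weighted sum = 15 - 30 = -15

print(classify(weights, bias, first), classify(weights, bias, second))
```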

K Nearest Neighbors

They have the easiest concept. If you live next to good people, then you are most likely a good person too. That simple ☺

So based on information taken from customers, a company can categorize a new one by checking out the 10 nearest neighbors.
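The whole method fits in a few lines. This sketch assumes each customer is described by two numbers (age and monthly spend) and a known category. The data is invented, and it uses 3 neighbors instead of 10 only because the example data set is so small.

```python
import math
from collections import Counter

def knn_predict(known, new_point, k=3):
    """Label `new_point` by majority vote among its k nearest known points."""
    by_distance = sorted(known, key=lambda item: math.dist(item[0], new_point))
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Made-up customers: (age, monthly spend) and the category they belong to.
customers = [
    ((22, 20), "basic"),
    ((25, 25), "basic"),
    ((27, 30), "basic"),
    ((45, 90), "premium"),
    ((50, 100), "premium"),
    ((48, 95), "premium"),
]

print(knn_predict(customers, (47, 92)))  # premium
print(knn_predict(customers, (24, 22)))  # basic
```

In practice the variables are usually rescaled first, so that one with large values (like spend) does not dominate the distance.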

It all starts with Data Analysis?

Can we start learning ML directly? Practically speaking, once you find out that most ML algorithms have Data Analysis tools within their DNA, it becomes easier to learn them afterwards. Just as Neural Networks are an extension of ML, the latter, in turn, is an extension of Data Analysis, which includes all the statistical tests, to name a few:

- t tests

- F test (ANOVA)

- Chi Square

- Regression coefficient

Pillar 3: Natural Language Processing

Description

In short, NLP is part of the AI that processes text to obtain the right information from a “human’s natural language”. So it is about:

- Computer code that processes text or languages to extract the needed information

- Algorithms that enable computers to derive meaning from humans’ natural language

- Solutions that try to interpret text like any human would do!

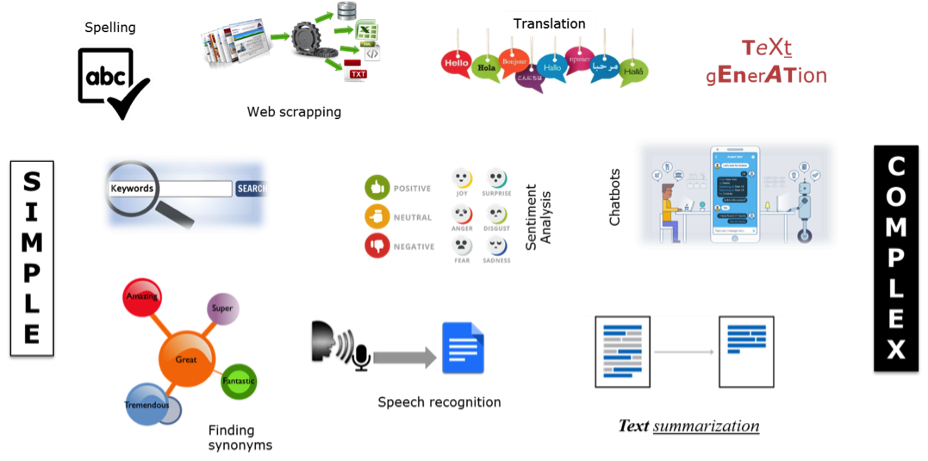

Fields of application

The scope of applications related to NLP is exploding! Below is a list of possibilities, but the sky is the limit. The reason is that NLP science is still at an early stage and under intense research and development.

NLP with ML and Deep Learning

Now, can we learn NLP without prior knowledge of Machine Learning and Deep Learning models? Partially, yes. But to claim a profound understanding for serious applications, a thorough comprehension of all the above-mentioned Machine Learning algorithms is a must. In fact, most NLP applications pass through the process below:

Step 1: Data preparation

This is the most important phase, where all the text of the corpus should go through:

- Tokenization

- Stemming

- Lemmatization

- Storing in Document-Term Matrix format
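The steps above can be sketched in plain Python. The two-sentence corpus is made up, and `stem` is a deliberately crude suffix stripper written for this example, not a real stemming algorithm. Lemmatization is left out because it needs a dictionary of word forms, which dedicated NLP libraries provide.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())

def stem(token):
    """Crude illustrative stemmer: strip a few common English suffixes."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) > len(suffix) + 2:
            return token[: -len(suffix)]
    return token

corpus = ["Mild throat pain", "Throat pains and coughing"]

# Tokenization followed by stemming, one list of terms per document.
documents = [[stem(token) for token in tokenize(text)] for text in corpus]

# Document-term matrix: one row per document, one column per term,
# 1 if the term occurs in the document and 0 otherwise.
vocabulary = sorted({term for doc in documents for term in doc})
matrix = [[1 if term in doc else 0 for term in vocabulary] for doc in documents]

print(vocabulary)  # ['and', 'cough', 'mild', 'pain', 'throat']
print(matrix)      # [[0, 0, 1, 1, 1], [1, 1, 0, 1, 1]]
```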

Step 2: Machine Learning

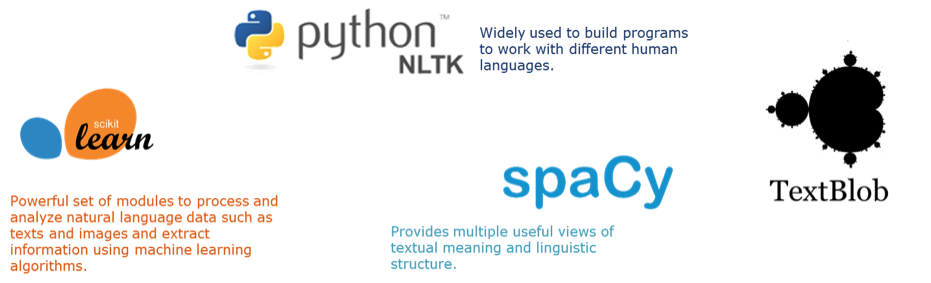

Once text is transformed into “1-0” tables, the technologies available on the market tremendously facilitate the quick application of the above and of all the ML algorithms. The most used open source libraries can be summarized with the below:

An example of how Machine Learning applies to NLP would be telling whether the opinion inside a long text is “positive”, by detecting words like [happy, nice, entertain etc.] in it after processing it with one of the above libraries. Or, like our virtual doctor, detecting words like [throat, pain, mild] and informing the patient with a generated output [drink, tea].
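Both examples can be mimicked with a small keyword sketch. Note the assumption: the word lists and the single rule below are written by hand, standing in for what a trained Machine Learning model would have learned from thousands of examples. The function names are hypothetical.

```python
import re

POSITIVE_WORDS = {"happy", "nice", "entertain"}

# Hand-written stand-in for a trained model: keywords -> generated output.
ADVICE_RULES = {
    frozenset({"throat", "pain", "mild"}): ["drink", "tea"],
}

def words_in(text):
    """Return the set of lowercase words found in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def is_positive(text):
    """True if at least one known positive word appears in the text."""
    return bool(words_in(text) & POSITIVE_WORDS)

def advise(text):
    """Return the advice whose keywords all appear in the text, else None."""
    found = words_in(text)
    for keywords, advice in ADVICE_RULES.items():
        if keywords <= found:
            return advice
    return None

print(is_positive("What a nice and happy evening"))         # True
print(advise("Well, I have had this mild throat pain"))      # ['drink', 'tea']
print(advise("My knee hurts"))                               # None
```

Returning `None` corresponds to the case where the virtual doctor should refer the patient to a specialized doctor.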

Step 3: Implementation

The last step would be framing all the outputs and results into an appealing application where a UX professional would be playing an important role to make it easy on the user.

Conclusion

So what makes an AI expert a real … expert?

My answer would be that AI expertise is like becoming a piano virtuoso! The key elements of music are not a lot to learn: 7 notes and something like 4 series of chords.

But the mixing, the tempo, the spirit is there to compose all the music of the world! After years of practice and improvement, a pianist can become a virtuoso.

The same goes for AI. Once all the components are learned, it is then up to you how much you want to involve yourself in the applications, and after years of practice and mixing of tools, you can claim to have become an AI expert ☺

But it all starts here, by learning all the tools!
